Evolution of Natural Language Understanding in Robotics

The evolution of natural language understanding in robots marks a significant leap in how humans and machines communicate. This advancement is pivotal for enhancing user experience in various applications, including smart homes and personal assistants.

Importance of Natural Language Processing in Robots

Natural Language Processing (NLP) plays a crucial role in enabling robots to understand and interpret human language. By leveraging NLP, robots can process voice commands, answer questions, and engage in conversations more naturally. This capability is essential for creating robots that can interact seamlessly with users in diverse environments.

| Aspect | Description |
|---|---|
| Understanding Commands | Robots utilize NLP to accurately comprehend and execute voice commands provided by users. |
| User Interaction | Improved NLP allows for more fluid and engaging interactions between humans and robots. |
| Language Flexibility | Robots can adapt to different dialects and speech patterns, increasing accessibility. |

The demand for intuitive voice interfaces has led to innovations in NLP, making it a fundamental technology for modern robotic systems. For more details, see our article on robot voice recognition and nlp.

Advancements in Voice Recognition Technology

Voice recognition technology has advanced considerably, improving the ability of robots to recognize and interpret spoken input accurately. Modern systems employ sophisticated algorithms that can analyze speech in real-time, leading to enhanced performance in various contexts.

| Improvement | Impact |
|---|---|
| Increased Accuracy | Modern voice recognition systems can achieve over 90% accuracy in recognizing spoken language, though results vary with background noise, accent, and vocabulary. |
| Speed of Processing | Real-time processing capabilities allow robots to respond to commands almost instantly. |
| Adaptability | Advanced technologies allow robots to learn from user interactions, enhancing their ability to recognize individual speech patterns over time. |

With advancements in voice recognition, robots can better understand nuances like accents and dialects. Research into training robots to understand accents plays a vital role in this area.

These developments in natural language understanding in robots not only improve their responsiveness but also foster more engaging human-robot interactions, paving the way for more widespread adoption of robotic technologies in everyday life. For further reading on conversational abilities, check out our article on conversation capabilities in robots.

Contextual Understanding in Robots

As robots evolve, their ability to engage in natural conversations greatly relies on contextual understanding. This enables them to comprehend subtleties in communication, allowing for more fluid interactions with humans.

How Robots Grasp Meaning from Context

Robots utilize various methods to extract meaning based on context. Contextual understanding involves analyzing the surrounding information during conversation, which helps clarify ambiguous phrases and commands. By incorporating this capability, robots can enhance their effectiveness in addressing user requests.

The following factors play a significant role in how robots comprehend context:

| Factor | Description |
|---|---|
| Previous Interactions | Robots can refer back to previous exchanges in a conversation to gain insights into user preferences and interests. |
| Environment | The physical surroundings provide clues that may impact the robot’s understanding. For example, the presence of household items may signal specific tasks. |
| Tone and Emotion | Robots can analyze tone to infer feelings or urgency, allowing for responsive and empathetic interactions. |
| Cultural References | Knowledge of cultural context enables robots to interpret idioms or colloquialisms accurately. |

Using these contextual indicators, robots can bridge gaps in communication and respond appropriately, thereby improving user satisfaction and interaction.
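To make the role of previous interactions and environment more concrete, here is a minimal sketch of how a vague command such as "turn them off" might be resolved. The `ConversationContext` class, the `KNOWN_DEVICES` tuple, and the `resolve_target` function are hypothetical names chosen for illustration; a real system would use a far richer model than keyword matching.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical device names, assumed for this example only.
KNOWN_DEVICES = ("lights", "thermostat", "speaker")


@dataclass
class ConversationContext:
    """Minimal context: the last device mentioned and what the sensors see."""
    last_device: Optional[str] = None
    visible_objects: List[str] = field(default_factory=list)


def resolve_target(command: str, ctx: ConversationContext) -> Optional[str]:
    """Return the device a command refers to, falling back on context."""
    words = command.lower().replace(".", "").split()

    # 1. An explicitly named device wins and is remembered for later turns.
    for device in KNOWN_DEVICES:
        if device in words:
            ctx.last_device = device
            return device

    # 2. A pronoun refers back to the device from the previous exchange.
    if ("it" in words or "them" in words) and ctx.last_device:
        return ctx.last_device

    # 3. Otherwise use the environment, but only if exactly one device is in view.
    in_view = [d for d in KNOWN_DEVICES if d in ctx.visible_objects]
    return in_view[0] if len(in_view) == 1 else None


ctx = ConversationContext()
first = resolve_target("Turn on the lights", ctx)   # named explicitly
second = resolve_target("Now turn them off", ctx)   # resolved from history
```

The ordering of the three checks is a design choice: explicit words are trusted first, conversation history second, and the environment last, because a wrong guess from the surroundings is harder for the user to predict.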

Utilizing Contextual Cues for Enhanced Interaction

In addition to understanding context, robots leverage contextual cues to enhance interactions with users. These cues can manifest through various elements:

- Visual Cues: Cameras and sensors help robots recognize gestures or facial expressions, which can guide their responses.
- Voice Modulation: By analyzing the pitch or intensity of a user’s voice, robots modify their responses to convey understanding and engage in more meaningful dialogue.
- Situational Awareness: Robots equipped with situational awareness can detect changes in their environment, allowing them to adapt their actions accordingly. This awareness can be crucial in smart home settings, where tasks might vary depending on time or occupancy.
- User History: Keeping track of personal preferences enables robots to customize interactions based on individual user behavior. For instance, if a user frequently asks for weather updates in the morning, the robot may proactively provide this information.

The following table summarizes how each interaction cue affects communication:

| Interaction Cue | Impact on Communication |
|---|---|
| Visual Cues | Improves understanding of user emotions and intentions. |
| Voice Modulation | Creates a more engaging and relatable interaction. |
| Situational Awareness | Allows robots to perform relevant tasks in real-time. |
| User History | Personalizes interactions for enhanced user experience. |
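The user-history cue, such as offering the weather in the morning because the user usually asks for it then, can be sketched with a simple counter. The `UserHistory` class and its `min_count` threshold are assumptions made for this example, not a description of any particular product.

```python
from collections import Counter
from typing import Optional


class UserHistory:
    """Counts which requests a user makes at which hour of the day."""

    def __init__(self, min_count: int = 3):
        # Assumed threshold: how often a request must recur before
        # the robot offers it proactively.
        self.min_count = min_count
        self._counts: Counter = Counter()  # (hour, request) -> times seen

    def record(self, hour: int, request: str) -> None:
        self._counts[(hour, request)] += 1

    def suggestion_for(self, hour: int) -> Optional[str]:
        """Return the most frequent request for this hour, if frequent enough."""
        candidates = {
            request: count
            for (h, request), count in self._counts.items()
            if h == hour and count >= self.min_count
        }
        if not candidates:
            return None
        return max(candidates, key=candidates.get)


history = UserHistory()
for _ in range(3):
    history.record(7, "weather")   # asked at 7 a.m. on three mornings
history.record(7, "news")          # asked once, below the threshold
morning_offer = history.suggestion_for(7)
```

Requiring a minimum count keeps the robot from volunteering information after a single one-off request.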

By effectively using these contextual cues, robots can significantly improve their natural language understanding capabilities. This advancement fosters better relationships between users and robotic systems, ultimately paving the way for more intuitive technologies. For more information about how robots can recognize voice and improve conversations, visit our articles on robot voice recognition and nlp and conversation capabilities in robots.

Tone and Emotion Recognition

Understanding tone and emotion is a critical component of natural language understanding in robots. Effective interaction depends not only on the content of the spoken words but also on how they are delivered.

How Robots Interpret Tone of Voice

Robots utilize advanced algorithms to analyze the tone of voice during human interactions. This involves parsing various elements, including pitch, volume, and rhythm. By discerning these vocal attributes, robots can infer the emotional state of the speaker. For example, a high-pitched voice might indicate excitement or distress, whereas a lower pitch could suggest calmness or authority.

The following table summarizes common tones and the emotions they may represent:

| Tone of Voice | Possible Emotion |
|---|---|
| High-Pitched | Excitement, Nervousness |
| Low-Pitched | Calmness, Authority |
| Fast Pace | Anxiety, Urgency |
| Slow Pace | Relaxation, Sadness |
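The mapping in the table can be written as a small rule-based function. This is only a sketch: the four threshold constants are illustrative assumptions rather than calibrated values, and production systems typically learn such mappings from labeled audio instead of hard-coding them.

```python
from typing import List

# Illustrative thresholds, assumed for this example only.
HIGH_PITCH_HZ = 220.0
LOW_PITCH_HZ = 120.0
FAST_WORDS_PER_MIN = 180.0
SLOW_WORDS_PER_MIN = 110.0


def candidate_emotions(pitch_hz: float, words_per_minute: float) -> List[str]:
    """Return the emotions the table associates with a pitch and speaking pace."""
    emotions: List[str] = []

    # Pitch cue: high or low, or no signal when in between.
    if pitch_hz >= HIGH_PITCH_HZ:
        emotions += ["excitement", "nervousness"]
    elif pitch_hz <= LOW_PITCH_HZ:
        emotions += ["calmness", "authority"]

    # Pace cue: fast or slow, or no signal when in between.
    if words_per_minute >= FAST_WORDS_PER_MIN:
        emotions += ["anxiety", "urgency"]
    elif words_per_minute <= SLOW_WORDS_PER_MIN:
        emotions += ["relaxation", "sadness"]

    return emotions


hurried = candidate_emotions(pitch_hz=250.0, words_per_minute=200.0)
```

The function returns candidates rather than a single label because, as the table shows, one vocal attribute can point to several emotions; other cues are needed to narrow them down.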

Implementing voice recognition technology allows robots to enhance their interactions with users. By recognizing emotional cues, robots can respond more appropriately, creating a more engaging experience for the user. This capability is integral to developing advanced communication, as detailed in our article on robot voice recognition and nlp.

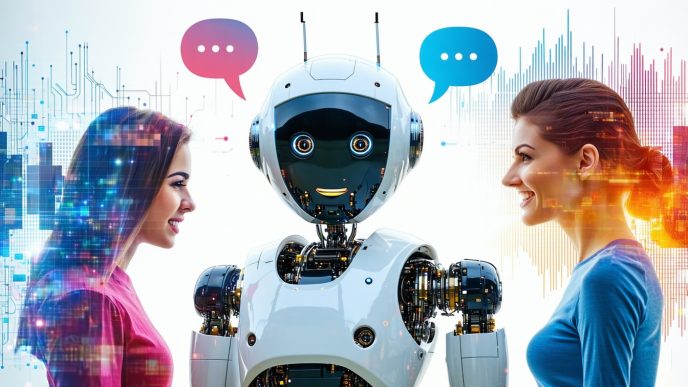

Importance of Emotion Recognition in Human-Robot Interaction

Emotion recognition is essential for ensuring effective communication between humans and robots. When robots can identify and appropriately respond to a user’s emotional state, it fosters a more natural and empathetic interaction. This is particularly important in scenarios where robots serve as companions or assistants in smart homes.

The table below outlines the benefits of effective emotion recognition:

| Benefit | Description |
|---|---|
| Improved User Trust | Understanding and responding to emotions builds confidence in the robot’s capabilities. |
| Enhanced User Satisfaction | Emotion-aware responses contribute to a more pleasant experience. |
| Better Adaptability | Robots can modify their behavior based on user emotions to create a personalized experience. |

For families using robots in everyday environments, the ability to comprehend emotional context significantly enhances usability. Adapting to a user’s feelings can lead to a more harmonious living situation, as discussed in our feature on robot responses and personality design.

As technology advances, the integration of emotion recognition into robotics continues to evolve, promising exciting trends in how robots interact with their human counterparts. For more insights into the future of this technology, see our piece on future of voice interaction with robots.

Natural Language Understanding Algorithms

Natural language understanding is a pivotal element that enables robots to interact with humans effectively. At the core of this functionality are algorithms that leverage machine learning and neural networks. This section explores how these technologies enhance a robot’s ability to comprehend language.

Machine Learning and NLP in Robotics

Machine learning (ML) plays a significant role in natural language processing (NLP) for robots. With ML, robots can learn from vast amounts of data to improve their understanding of language over time. This learning process involves training algorithms on diverse datasets that include various language structures, commands, and intents.

In the arena of NLP, understanding context and semantics is essential. Machine learning models can analyze sentences, break them down into parts, and recognize patterns. This ability enables robots to process voice commands accurately and respond appropriately, enhancing user interaction.

| Machine Learning Components | Description |
|---|---|
| Algorithms | The rules and processes that guide the learning and decision-making. |
| Datasets | Collections of text or voice samples used for training. |
| Models | Representations of learned patterns that help in predicting responses. |
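The three components in the table can be seen together in a toy intent classifier. The sketch below uses multinomial naive Bayes with add-one smoothing as the algorithm, a handful of hand-written utterances as the dataset, and the resulting word counts as the model. The utterances and intent names are invented for illustration, and naive Bayes is chosen here only because it fits in a few lines, not because the article's systems necessarily use it.

```python
import math
from collections import Counter, defaultdict

# Dataset: tiny, hand-written (utterance, intent) pairs, purely illustrative.
TRAINING_DATA = [
    ("turn on the lights", "lights_on"),
    ("switch the lights on please", "lights_on"),
    ("turn off the lights", "lights_off"),
    ("switch off the lamp", "lights_off"),
    ("play some relaxing music", "play_music"),
    ("put on a song", "play_music"),
]


def train(examples):
    """Algorithm: count how often each word appears under each intent."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    vocab = set()
    for text, intent in examples:
        tokens = text.lower().split()
        word_counts[intent].update(tokens)
        intent_counts[intent] += 1
        vocab.update(tokens)
    # Model: the learned counts, used later to score new utterances.
    return {"words": word_counts, "intents": intent_counts, "vocab": vocab}


def predict(model, text):
    """Score each intent with smoothed log-probabilities and pick the best."""
    tokens = text.lower().split()
    total_examples = sum(model["intents"].values())
    vocab_size = len(model["vocab"])
    best_intent, best_score = None, -math.inf
    for intent, n_examples in model["intents"].items():
        counts = model["words"][intent]
        denominator = sum(counts.values()) + vocab_size  # add-one smoothing
        score = math.log(n_examples / total_examples)
        for token in tokens:
            score += math.log((counts[token] + 1) / denominator)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


model = train(TRAINING_DATA)
intent = predict(model, "switch on the lights")
```

With so little data the classifier is fragile, which illustrates why the text stresses training on large, diverse datasets.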

For further insight into how robots utilize voice recognition technology, you can refer to our article on robot voice recognition and nlp.

Neural Networks for Enhancing Language Comprehension

Neural networks are a class of machine learning models loosely inspired by the way neurons are connected in the human brain. In the context of robotics, neural networks help in enhancing language comprehension by enabling robots to parse language at varying levels of complexity.

These networks can be structured in different ways to address various challenges in natural language understanding. For instance, recurrent neural networks (RNNs) are particularly useful for processing sequences of data, such as sentences. They carry a hidden state that retains information about earlier words as each new word is processed, improving the robot’s ability to grasp the meaning of longer or more complex commands.

| Neural Network Types | Advantages |
|---|---|
| Recurrent Neural Networks (RNNs) | Good for sequential data, understanding context over multiple words. |
| Convolutional Neural Networks (CNNs) | Detect local patterns such as short word sequences; best known for image recognition but adaptable to text tasks such as classification. |
| Transformers | Excel in handling long-range dependencies, commonly used in modern NLP tasks. |
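To show what it means for an RNN to retain information about earlier words, here is a minimal sketch of a single recurrent update in plain Python. The weights `W_XH`, `W_HH`, and `B` are fixed, untrained numbers chosen arbitrarily, and the two-dimensional one-hot vectors `LIGHTS` and `OFF` are stand-ins for real word embeddings; all of these are assumptions for illustration.

```python
import math


def rnn_step(x, h, w_xh, w_hh, b):
    """One Elman-style update: h_new = tanh(W_xh @ x + W_hh @ h + b)."""
    new_h = []
    for i in range(len(h)):
        total = b[i]
        total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(w_hh[i][j] * h[j] for j in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h


def encode(sequence, w_xh, w_hh, b):
    """Feed word vectors in order; the final hidden state summarizes them."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h


# Arbitrary, untrained weights (assumed values, for demonstration only).
W_XH = [[0.5, -0.3], [0.1, 0.8]]
W_HH = [[0.4, 0.2], [-0.6, 0.3]]
B = [0.0, 0.1]

# Toy one-hot "embeddings" for two words.
LIGHTS = [1.0, 0.0]
OFF = [0.0, 1.0]

state_a = encode([LIGHTS, OFF], W_XH, W_HH, B)
state_b = encode([OFF, LIGHTS], W_XH, W_HH, B)
```

Because each step mixes the new word with the previous hidden state, the same two words in a different order produce a different final state. That order sensitivity is what lets a trained RNN use earlier words as context.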

Robots equipped with neural networks can process and generate human-like responses, leading to more natural conversations. This capability not only improves interaction quality but also aids in task execution, making robots more intuitive.

For a closer look at how robots manage conversation capabilities, check our article on conversation capabilities in robots. With advancements in natural language understanding in robots, there is a growing integration of these technologies into our everyday devices, changing how users interact with their robotic companions.

Challenges in Natural Language Understanding

Natural language understanding in robots presents several significant challenges as developers strive to create systems that communicate effectively with humans. These challenges primarily stem from the inherent complexities of human language.

Ambiguity in Language

Language can often be ambiguous, leading to multiple interpretations of a single command or question. This can confuse robots that rely on precise instructions to function effectively. The presence of homonyms, idioms, and nuances in meaning can make it difficult for a robot to accurately grasp the intended message.

Here are some examples of ambiguous phrases that may pose challenges to robots:

| Phrase | Possible Interpretations |
|---|---|
| “I saw her duck.” | “I saw her lower her head” vs. “I saw the duck that belongs to her.” |
| “Can you can a can as a canner can can a can?” | “Can” acts as a modal verb, a main verb (to preserve food), and a noun (a container), so each occurrence must be resolved from its position in the sentence. |
| “That was a great show.” | Could refer to a performance, a TV show, or a spectacle. |

Robots need advanced algorithms to discern context and disambiguate language based on the surrounding cues. For a deeper understanding of how robots interpret voice, consult our article on robot voice recognition and nlp.
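One simple way to use surrounding cues is to compare the words around an ambiguous term with a set of words typical of each sense, in the spirit of the Lesk algorithm. The sketch below is heavily simplified: the `SENSES` dictionary and its signature words are hand-picked assumptions, whereas real systems draw on dictionaries or learned representations.

```python
from typing import Optional

# Hypothetical signature words for each sense of "duck".
SENSES = {
    "duck": {
        "bird": {"pond", "feed", "pet", "quack", "feathers"},
        "lower_head": {"low", "branch", "ball", "quickly", "avoid"},
    }
}


def disambiguate(word: str, sentence: str) -> Optional[str]:
    """Pick the sense whose signature overlaps most with the sentence."""
    cleaned = sentence.lower().replace(".", "").replace(",", "")
    context = set(cleaned.split())
    scores = {
        sense: len(signature & context)
        for sense, signature in SENSES[word].items()
    }
    best = max(scores, key=scores.get)
    # With no overlapping words there is no evidence, so report "unknown".
    return best if scores[best] > 0 else None


sense = disambiguate("duck", "I saw her duck to avoid the ball.")
```

Returning `None` when nothing overlaps matters in practice: for a bare "I saw her duck." the robot is better off asking a clarifying question than guessing.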

Handling Complex Commands and Queries

The ability to process complex commands is crucial for natural language understanding in robots. Humans often issue multi-part commands or questions that require the listener to parse various elements and respond appropriately. This complexity can overwhelm robotic systems that are still developing their language processing capabilities.

Consider these examples of complex commands:

| Command | Complexity Level |
|---|---|
| “Turn off the living room lights and play relaxing music.” | High |
| “Set a timer for 10 minutes and remind me to check the oven.” | High |
| “What’s the weather like today and can you tell me a joke?” | Medium |

These commands require robots to not only comprehend multiple instructions at once but also to prioritize actions effectively. To enhance their ability to handle such complexities, developers deploy sophisticated natural language processing techniques and machine learning algorithms. More about improving interaction can be found in our discussion on conversation capabilities in robots.
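A first step in handling a multi-part command is splitting it into separate instructions. The sketch below does this with a regular expression on the word "and"; the `split_commands` helper is a hypothetical name, and the approach is deliberately naive.

```python
import re
from typing import List


def split_commands(utterance: str) -> List[str]:
    """Split a compound command on 'and' / 'and then' into sub-commands."""
    text = utterance.strip().rstrip(".?!")
    parts = re.split(r"\s+and\s+(?:then\s+)?", text, flags=re.IGNORECASE)
    return [part.strip() for part in parts if part.strip()]


steps = split_commands(
    "Turn off the living room lights and play relaxing music."
)
# steps == ["Turn off the living room lights", "play relaxing music"]
```

Splitting on a keyword breaks down quickly: a request like "play salt and pepper shaker sounds" would be cut in the wrong place. That is one reason the article points to machine learning techniques rather than fixed rules for this task.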

Efforts to enhance natural language processing continue, focusing on making robots better equipped to address ambiguity and complex requests efficiently. Understanding these challenges is essential for improving human-robot interaction and designing more capable systems. For insights into training robots to better understand different speech patterns and quirks, see training robots to understand accents.

Applications of Natural Language Understanding in Robotics

Natural language understanding (NLU) in robotics has paved the way for innovative applications, greatly enhancing the interaction between humans and machines. Two prominent areas where NLU is making a significant impact are smart home integration and personal assistants/companion robots.

Smart Home Integration

The integration of robotics in smart homes demonstrates how NLU can transform everyday environments. Robots equipped with NLU capabilities can understand and execute voice commands, enabling seamless control of home automation systems. These systems allow users to manage lights, security, heating, and more using simple verbal instructions.

| Feature | Description |
|---|---|
| Voice Control | Users can issue commands to control smart devices, like “turn on the living room lights.” |
| Context Awareness | Robots interpret user preferences and past interactions to make informed suggestions. |
| Device Management | Capability to manage multiple smart devices through a unified interface. |

By employing robot voice recognition and nlp, these systems can enhance user convenience and efficiency, making daily routines more manageable. Integration with other smart home devices can lead to a cohesive environment where everything functions in harmony.
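The idea of managing several devices through one interface can be sketched as a small registry that turns a recognized command into a state change. The `SmartHome` class, its methods, and the command pattern it accepts are all assumptions for this example and do not correspond to any real home automation API.

```python
import re


class SmartHome:
    """Hypothetical unified interface over several on/off devices."""

    # Matches commands like "turn on the living room lights".
    _PATTERN = re.compile(r"turn (on|off) the (.+) (\w+)$")

    def __init__(self):
        self.state = {}  # (room, device) -> "on" or "off"

    def register(self, room: str, device: str) -> None:
        self.state[(room, device)] = "off"

    def handle(self, command: str) -> bool:
        """Apply a command; return False if it is not understood."""
        match = self._PATTERN.match(command.lower().strip().rstrip("."))
        if not match:
            return False
        action, room, device = match.groups()
        if (room, device) not in self.state:
            return False  # unknown room or device
        self.state[(room, device)] = action
        return True


home = SmartHome()
home.register("living room", "lights")
understood = home.handle("Turn on the living room lights.")
```

Returning `False` for unknown devices gives the robot a hook for a spoken follow-up such as asking which device the user meant, instead of failing silently.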

Personal Assistants and Companion Robots

Another significant application of NLU is in personal assistants and companion robots. These robots are designed to assist users with daily tasks, provide companionship, and facilitate communication. By utilizing NLU, they can understand complex inquiries and engage in meaningful dialogue.

| Functionality | Description |
|---|---|
| Task Management | Robots can schedule appointments, set reminders, and answer questions about tasks. |
| Emotional Support | Capable of recognizing and responding to emotional cues to offer comfort and companionship. |
| Adaptive Learning | These robots learn user preferences over time, enhancing their interaction and responsiveness. |

Through advancements in conversation capabilities in robots, personal assistants and companion robots offer a more natural interaction experience. They can respond to user inquiries and requests in a way that feels personalized and intuitive.

The applications of natural language understanding in robotics showcase significant advancements that have the potential to improve daily life. As technology continues to evolve, the integration of NLU into everyday robotic systems will likely increase, bringing new functionalities to users. Those interested in the future of such interactions should explore topics such as future of voice interaction with robots.

Future Trends in Natural Language Understanding

As technology continues to evolve, so does the capacity for natural language understanding in robots. This section discusses two prominent trends shaping the future: voice-activated IoT devices and conversational AI for enhanced user experience.

Voice-Activated IoT Devices

Voice-activated Internet of Things (IoT) devices represent a significant advancement in the integration of robotic technology into everyday life. These devices enable users to control household functions through voice commands, making interactions more intuitive and accessible.

The increasing complexity of tasks that these devices can perform highlights the improvements in natural language processing algorithms. Users can issue commands related to various functions, such as adjusting lighting, controlling temperature, and even managing security systems.

The following table illustrates the progression of voice-activated IoT capabilities:

| Year | Features |
|---|---|
| 2015 | Basic command recognition |
| 2017 | Enhanced task-specific functionalities |
| 2020 | Contextual understanding of multi-step commands |
| 2023 | Seamless integration with AI for personalized interactions |

With advancements in voice recognition technology, robots now have the ability to understand context and respond accurately to complex requests. For further exploration of voice recognition capabilities, refer to our article on robot voice recognition and nlp.

Conversational AI for Enhanced User Experience

Conversational AI is another transformative trend, making interactions with robots more natural and engaging. This technology employs advanced algorithms to facilitate two-way communication, allowing users to hold more meaningful conversations with robots.

The ability of robots to not just interpret commands but also engage in dialogue reflects improvements in language comprehension and emotional recognition. Conversational AI can analyze the user’s intent and respond accordingly, enhancing the overall user experience.

| Application | Key Features |
|---|---|
| Personal Assistants | Context-aware dialogue, personalized responses |
| Companion Robots | Emotion recognition, adaptive conversation styles |

This advancement not only increases the effectiveness of voice interactions but also builds a stronger connection between users and their robotic companions. For deeper insights into conversational capabilities, check our article on conversation capabilities in robots.

Robotics enthusiasts and smart home users can expect that as natural language understanding in robots continues to progress, the technology will become even more responsive and adaptable to individual user needs. The future of voice interaction is bright, and it promises to redefine how humans and robots coexist. Explore more about this exciting future in our article on future of voice interaction with robots.